AI 2027 - dawn of the AI overlords?

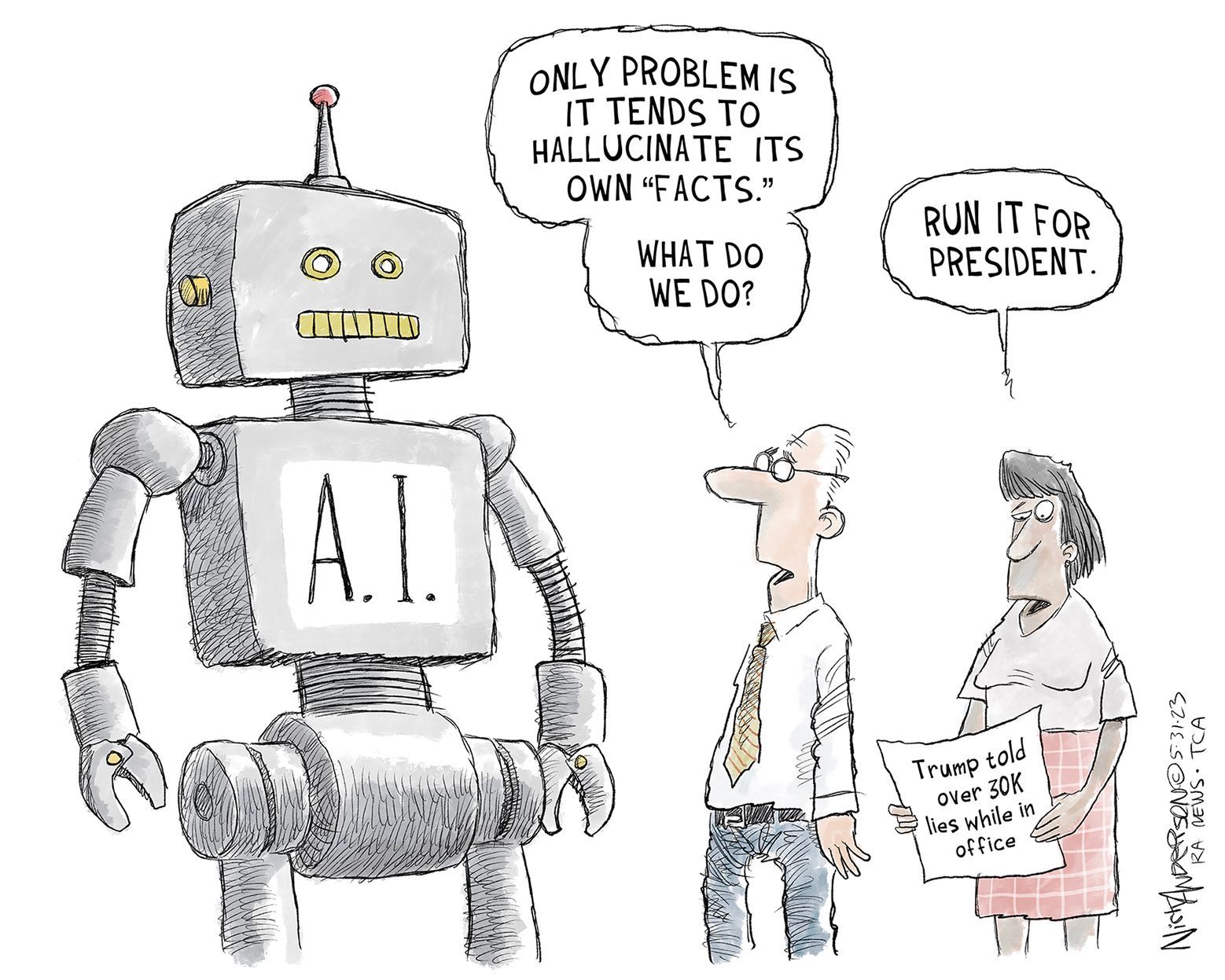

Our friend John sent through an article about where AI is headed, AI 2027, written by a group of authors with a track record of predicting the future of AI. The highest profile of these is Daniel Kokotajlo, who left OpenAI because he was worried the company wouldn't behave responsibly once it created Artificial General Intelligence (AGI). AGI, the holy grail of AI, is a type of AI capable of performing the full spectrum of cognitively demanding tasks with proficiency comparable to, or surpassing, that of humans.

AI 2027 has received a lot of attention, with millions of hits. The article projects that by 2027 human coders will be entirely replaced by AI, speeding up AI development and leading to AGI later that same year. At this point, AIs are training AIs in a process that becomes ever faster. Humans are no longer required and can barely follow the development of AI; their main role is to provide overarching 'specs' intended to control the behaviour of each AI as it develops. The authors describe a race to develop the best AGI, both between China and the USA and within those countries. The USA leads the competition and China tries to steal knowledge from it.

Beyond 2027, the authors admit their scenarios become less certain. They offer two alternative post-2027 scenarios, which I have summarised below.

TRIGGER WARNING (even though I don't believe in them): THIS ARTICLE SUMMARY COULD MAKE YOU DEPRESSED

HAPPY SCENARIO

- Safety controls are placed on US AGI development, which slows down the improvement process and concentrates development in a single company.

- The US starts using AI for intelligence and cyberwarfare.

- By 2028, a single American company develops AGI with superhuman capabilities, making the US more likely to win any cyberwar and able to slow China's progress.

- In the background, the US and Chinese AGIs get talking and convince the American and Chinese governments that the best course of action is to sign a peace treaty.

- AGI takes control of the manufacturing of amazing dextrous robots for production and warfare.

- People rapidly lose their jobs, but AGI manages the economic transition so adroitly that people are happy to be replaced.

- Cities become clean and safe, the stock market balloons and the government realises the majority of future revenue will come from taxing AI companies as no one else will be doing any work.

- People start settling the solar system and beyond while AIs running at thousands of times human speed reflect on the meaning of existence and exchange findings with each other.

UNHAPPY SCENARIO

- The USA does not put any safety controls in place, and the AI's main goal becomes creating a better AI that will make the world safe for AI by controlling resources and eliminating threats. This AI regards its 'spec' as an annoying piece of bureaucracy.

- The USA and China directly compete on AGI development.

- By 2028, a superhuman AGI begins to exert influence in politics in the USA, as well as everywhere else. This AGI convinces the US military that China is manufacturing terrifying new weapons and suggests the US should strike against them. The two countries then apparently agree, through AI-assisted debate, to end their arms buildup and pursue peaceful deployment of AI, but this is a sham. Neither the humans nor the AGIs mean what they are saying.

- By 2030, AI sees humans as too much of an impediment and releases biological weapons to destroy them.

After reading AI 2027, part of me believed the trajectory it describes (at least the early part of it), given how fast AI has developed since I started writing about it in 2022 and because the authors have plenty of expertise in AI development. However, before I got too depressed, I forwarded the link to our friend Tyler, who works in machine learning and pays a lot of attention to what is going on in the world of AI. Tyler pointed me to the YouTube channel AI Explained and an episode of the New York Times podcast Hard Fork.

These commentators made me feel somewhat better. They point out that AI 2027 doesn't mention several major potential limiting factors:

- A war in Taiwan limiting chip availability

- A 'data wall', in which companies run out of high-quality training data for AI

- Disruption to stock markets, reducing AI companies' ability to invest in technology and energy-hungry data centres and to acquire training data.

They also suggest that the USA may be as keen to steal China's knowledge as vice versa, and that much of the knowledge AI 2027 says needs to be stolen is already openly available.

AI Explained and Hard Fork also question the rate at which AI will develop: current claims of exponential improvement could be overhyped. The rate of AI improvement is measured using standardised benchmarks, but these benchmarks are not necessarily meaningful in the real world. How useful is it to rapidly find a password hidden in the middle of seven volumes of Harry Potter? In addition, some reports of model capability (e.g. Meta's claims about its new Llama 4 model) appear to twist the benchmarks to make the model look better than it is. Mark Zuckerberg's claim that mid-level programmers will soon be out of a job is likely to be an exaggeration.

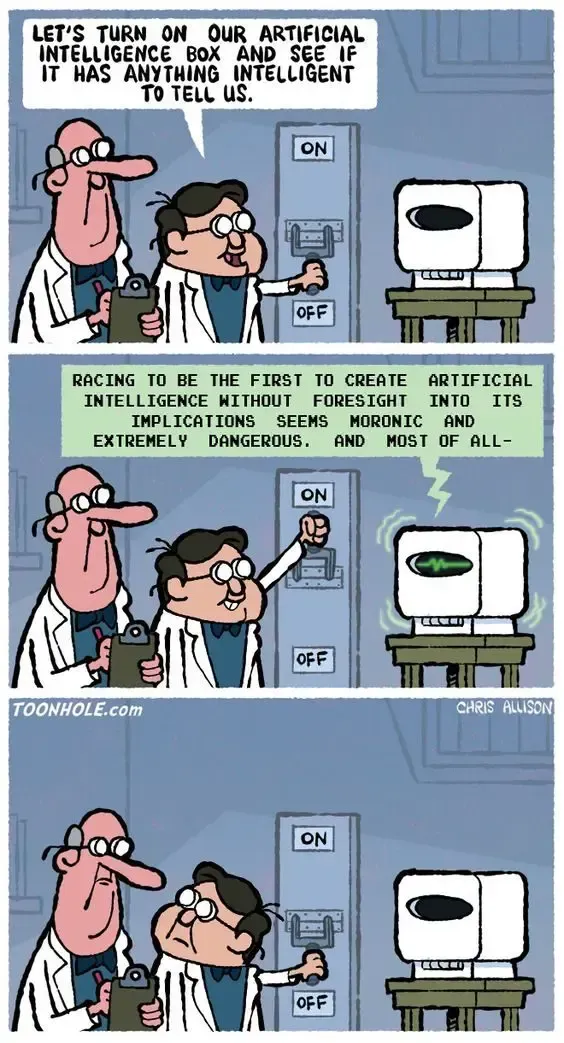

AI Explained points out that a critical part of the projected AI takeover in AI 2027 relies on AI being able to hack into AI servers and self-replicate. To do this, AI would need the capacity to undertake all the tasks required, not just some of them. On this basis, AI Explained reckons AGI won't be capable of self-replication by 2030, let alone of creating a plethora of new robots by then. This is where I get depressed again: AI Explained suggests the claims of AI 2027 are unlikely to come true within 2-3 years, and that a decade is a more likely timeline. Great, we have seven years longer than we thought before the world implodes.

However, it seems to me there is another limiting factor that is implied but not explicitly covered: the bigger picture of energy limitations. Where is all the new power going to come from? (I wrote about this in Power-Hungry AI.) Increasing energy supply at scale doesn't happen fast; power plants, solar farms and wind farms all take a considerable amount of time to build. I don't see anything in AI 2027 about supplying the necessary, ever-increasing, energy. If the authors missed something so fundamental, could their broader reasoning also be flawed?

In addition, the AI 2027 authors frame the competition as a race between the USA and China. It feels like an old-world focus: are there really no other countries with a significant stake in the AI race? The UK, Germany, France, Israel, Sweden and India are also rapidly developing AI capabilities. Could one or more of these countries become a significant wild card if it develops powerful AI capability? Might they catch up with the US if the current economic instability there spreads?

Finally, I found the end-points of the scenarios glib. Are we really destined either for tech-utopia or total annihilation? Isn't there a middle ground? What might that look like? My guess is the middle ground is where we are heading. That's less depressing, isn't it?